Publications

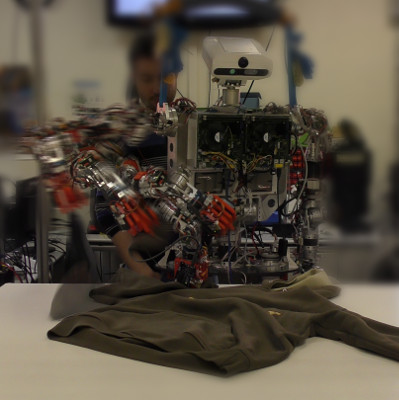

Improving and Evaluating Robotic Garment Unfolding: A Garment-Agnostic Approach

David Estevez, Raul Fernandez-Fernandez, Juan G. Victores, Carlos Balaguer

Abstract

Current approaches for robotic garment folding require a full view of an extended garment in order to successfully apply a model-based folding sequence. In this paper, we present a garment-agnostic algorithm that requires no model to unfold clothes and works using only depth data. Once the garment is unfolded, state-of-the-art approaches for folding may be applied. The algorithm presented is divided into three main stages. First, a Segmentation stage extracts the garment data from the background and approximates its contour as a polygon. Then, a Clustering stage groups regions of similar height within the garment, corresponding to different overlapped regions. Finally, a Pick and Place Points stage finds the most suitable points for grasping and releasing the garment for the unfolding process, based on a bumpiness value defined as the accumulated difference in height along selected candidate paths. The vision algorithm has been evaluated on a dataset of 30 samples from a total of 6 different garment categories with one and two folds. The whole unfolding algorithm has also been validated through experiments with an industrial robot platform over a subset of the dataset garments.
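As a rough illustration of the bumpiness value mentioned in the abstract, the sketch below samples a height profile along a straight candidate path and accumulates the height differences between consecutive samples. It is not the authors' implementation (see the linked source code for that): the function names, the nearest-pixel sampling, and the use of absolute differences are all assumptions made for this example.

```python
def bumpiness(profile):
    """Accumulated absolute height difference between consecutive samples.

    Assumption: "accumulated difference in height" is read here as the sum
    of absolute differences, so rises and drops both add to the value.
    """
    return sum(abs(b - a) for a, b in zip(profile, profile[1:]))


def height_profile(height_map, start, end, n_samples=10):
    """Sample heights along the straight segment from start to end.

    height_map is a list of rows; start and end are (row, col) pairs.
    Nearest-pixel sampling is a simplification chosen for this sketch.
    """
    if n_samples < 2:
        raise ValueError("n_samples must be at least 2")
    (r0, c0), (r1, c1) = start, end
    profile = []
    for i in range(n_samples):
        t = i / (n_samples - 1)
        r = round(r0 + t * (r1 - r0))
        c = round(c0 + t * (c1 - c0))
        profile.append(height_map[r][c])
    return profile


# Hypothetical toy height map: a small raised patch on a flat background.
toy_map = [
    [0, 0, 0, 0],
    [0, 2, 2, 0],
    [0, 2, 5, 0],
    [0, 0, 0, 0],
]

print(bumpiness([0, 1, 3, 2]))  # → 4
print(bumpiness(height_profile(toy_map, (2, 2), (2, 3), n_samples=2)))  # → 5
```

A flat profile gives a bumpiness of zero, while a path that crosses several overlapped layers accumulates a larger value.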

[paper] [slides] [bibtex] [source code]

Future Trends in Perception and Manipulation for Unfolding and Folding Garments

Abstract

This paper presents current approaches to 3D deformable object perception and manipulation oriented towards robotic garment folding. A major portion of these approaches is based on 3D perception algorithms that match garments to a model, and are thus model-based. They require a full view of an extended garment in order to then apply a preprogrammed folding sequence. Other approaches are based on 3D manipulation algorithms that focus on modifying the pose of the garment, also with the aim of matching it with a model. We present our own garment-agnostic algorithm, which requires no model to unfold clothes and works using a single view from an RGB-D sensor. The unfolding algorithm has also been validated through experiments using a garment dataset of RGB-D sensor data, with additional validation on a humanoid robot platform. Finally, conclusions regarding the current state of the art and the future trends of these research lines are discussed.

[paper] [slides] [bibtex] [source code]

Towards Robotic Garment Folding: A Vision Approach for Fold Detection

David Estevez, Juan G. Victores, Santiago Morante, Carlos Balaguer

Abstract

Folding clothes is a current trend in robotics. Before clothes can be folded, they have to be unfolded. It is not realistic to perform model-based unfolding, as every garment has a different shape, size, color, texture, etc. In this paper we present a garment-agnostic algorithm to unfold clothes that works using 3D sensor information. The depth information provided by the sensor is converted into a grayscale image. This image is segmented using the watershed algorithm, which provides us with labeled regions, each having a different height. In this labeled image, we assume that the highest region belongs to the fold. Starting from this region and ending at the garment border, tentative paths are created in several directions to analyze the height profile. For each profile, a bumpiness value is computed, and the path with the lowest value is selected as the unfolding direction. Finally, this line is extended to create a pick point on the fold border and a place point outside the garment. The proposed algorithm is tested with a small set of clothes in different positions.
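The direction-selection step described in the abstract can be sketched as follows. This is a minimal illustration and not the authors' code: it assumes a height map and a label image are already available (for instance from a watershed segmentation, which is not reproduced here), treats label 0 as background, starts every path at the centroid of the highest region, and reads bumpiness as the sum of absolute height differences. All function names are hypothetical.

```python
import math


def bumpiness(profile):
    # Assumed definition: sum of absolute differences between samples.
    return sum(abs(b - a) for a, b in zip(profile, profile[1:]))


def highest_region(height_map, labels):
    """Label with the largest mean height (label 0 = background, assumed)."""
    totals = {}
    for row_h, row_l in zip(height_map, labels):
        for h, lab in zip(row_h, row_l):
            if lab != 0:
                s, n = totals.get(lab, (0.0, 0))
                totals[lab] = (s + h, n + 1)
    return max(totals, key=lambda lab: totals[lab][0] / totals[lab][1])


def centroid(labels, label):
    """Mean (row, col) of all pixels carrying the given label."""
    pts = [(r, c) for r, row in enumerate(labels)
           for c, lab in enumerate(row) if lab == label]
    return (sum(r for r, _ in pts) / len(pts),
            sum(c for _, c in pts) / len(pts))


def profile_to_border(height_map, labels, start, angle):
    """Collect heights in one-pixel steps until the path leaves the garment."""
    r, c = start
    dr, dc = math.sin(angle), math.cos(angle)
    profile = []
    while True:
        ri, ci = round(r), round(c)
        inside = 0 <= ri < len(labels) and 0 <= ci < len(labels[0])
        if not inside or labels[ri][ci] == 0:
            return profile
        profile.append(height_map[ri][ci])
        r, c = r + dr, c + dc


def unfolding_direction(height_map, labels, n_directions=8):
    """Angle (radians) of the candidate path with the lowest bumpiness."""
    start = centroid(labels, highest_region(height_map, labels))
    angles = [2 * math.pi * k / n_directions for k in range(n_directions)]
    return min(angles, key=lambda a: bumpiness(
        profile_to_border(height_map, labels, start, a)))


# Hypothetical toy input: a flat garment (label 1) with a raised fold
# (label 2) that reaches the right-hand edge of the image.
toy_heights = [
    [1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1],
    [1, 1, 2, 2, 2],
    [1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1],
]
toy_labels = [[2 if h == 2 else 1 for h in row] for row in toy_heights]

print(unfolding_direction(toy_heights, toy_labels, n_directions=4))  # → 0.0
```

In this toy case the path at angle 0 stays on the fold until it reaches the border, so its profile is flat and it wins over the other three directions, each of which crosses one height step. The final extension of the selected line to a pick point on the fold border and a place point outside the garment is not covered by this sketch.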

[paper] [poster] [bibtex] [source code]